Thresholds of Change: Why Didn’t Green Chemistry Happen Sooner?

Technology’s Stories vol. 3, no. 1 – doi: 10.15763/JOU.TS.2015.4.1.02

It is comparatively simple to sketch an historical overview of the chemical industry in Europe and the United States across the twentieth century. The identification after 1900 of naturally occurring vitamins and hormones in the body, followed by the development of novel synthetic chemicals, set the stage for the development of the modern industry in the first few decades of the century. With expanded war production during World War I, the U.S. chemical industry began to catch up with the Europeans. In the interwar period, research quickened as novel synthetic compounds were developed for a variety of uses, including plastics, pesticides, and pharmaceuticals. Following World War II, production expanded dramatically using growing supplies of petroleum as a feedstock. This expanded industry finally aroused broad public and regulatory concerns that went beyond workplace hazards to include consumer exposures through pesticide residues, packaging materials, and food additives.[1]

While the U.S. government had sought greater control of air and water pollution from factory emissions in the first three decades of the twentieth century, targeting the production of specific, harmful chemicals lagged, as the owners of chemical companies aggressively sought to limit regulation of their activities.[2] At this time, the science of toxicology was funded by chemical manufacturers, and its practitioners took for granted that safe levels of workplace exposure could be defined for economically important chemicals.[3] While the 1906 Pure Food and Drug Act regulated adulterated and mislabeled drugs, it was not until the 1938 Federal Food, Drug, and Cosmetic Act, implemented in response to 105 poisoning deaths associated with the medicine Elixir Sulfanilamide, that the Food and Drug Administration (FDA) was given the ability to regulate the safety of drugs. In addition to regulating drugs, the 1938 act banned poisonous substances from food. However, the new law incorporated industrial toxicology’s belief that “the dose makes the poison” to the extent that it established a regulatory approach mandating acceptable tolerance levels for “unavoidable” poisonous substances in foods. The 1958 amendment to the law required premarket testing of medicines and new food additives, restricted unsafe levels of harmful chemicals, and banned carcinogens in food outright.[4] Congressional hearings on the safety of new plastics, fertilizers, and other chemicals led to the 1958 bill. Soon thereafter, publication of Rachel Carson’s Silent Spring (1962) raised further concern about the health and environmental effects of chemicals. Still, U.S. chemical manufacturers were big business by this time, with sufficient power to resist new regulatory efforts. Moreover, provisions and applications of the 1958 bill were shaped by pressure from manufacturing associations, most notably in allowing use of chemicals known to be toxic under the assurance that safe levels of exposure could be established and regulated.

In this paper, I explore the following counterfactual question: could twentieth-century chemical synthesis and production have proceeded in a way that caused less damage to human health and the environment? The option of pursuing safer chemicals was precluded, in part, as the result of technical and regulatory assumptions that regulation could and should proceed by identifying safe and unsafe levels of chemicals, rather than distinguishing between safe and unsafe chemicals tout court. These industrial chemicals could have been developed with less harmful properties for physiological and ecological health, in particular as the result of adoption of a research program to design chemicals that were “benign by design” (in the words of contemporary “green chemists” Paul Anastas and John Warner) rather than the path that firms and regulators chose: designing toxic chemicals with an eye to safe levels of use.

Counterfactuals in the History of Technology

Anastas and Warner claim that twentieth-century chemistry took a wrong turn in targeting safe levels of toxic chemicals – a fateful step that could have been avoided. They hold that the Paracelsian view that “the dose makes the poison” served as an excuse to justify inaction: “At some point when one is dealing with substances of high toxicity, unknown toxicity, carcinogenicity, or chronic toxicity, it becomes problematic, if not impossible, to set appropriate levels that are tolerable to human health and the environment.”[5] Anastas and Warner envision an alternative path that chemistry could have pursued, a greener approach to chemistry that would have avoided many of the harms associated with twentieth-century “brown chemistry.”[6]

Fig. 1: The Valley of the Drums. A toxic waste site in Bullitt County, Kentucky. A 1979 cleanup effort by the EPA was invoked by proponents of the 1980 Comprehensive Environmental Response, Compensation, and Liability Act, known as the Superfund Act. By Environmental Protection Agency [public domain], via Wikimedia Commons.

Addressing counterfactual possibilities requires addressing two questions: 1) whether a change is insertible in real history: that is, whether it could have happened given plausible changes in history as it occurred, and 2) whether a postulated change would have led to a branching path in history or would have been compensated for by substitute causes. The latter requires examination of second-order counterfactuals to see whether consequences of the proposed counterfactual would have reinforced a new historical trajectory or have been compensated for by other causes reverting historical development to the actual path it took (or one close to it). Such amplifying or reversionary second-order counterfactuals can lead to either underdetermination (a branching path hinges on the postulated change) or overdetermination (the postulated change is insufficient to overcome other causes of the trajectory as it occurred).[8]

Underdetermination versus Overdetermination

The tacit emphasis on underdetermination by constructivist historians of science and technology was first made evident in Shapin and Schaffer’s argument that the political climate in Restoration England favored Boyle’s experimental program over Hobbes’s deductive science modeled on geometry. Given a more favorable political climate for Hobbes, not impossible given his role as a tutor of Charles II, science could have been institutionalized in a way that discounted experimentalism. However, given the multiple ways that even those pursuing a mathematical, deductive approach incorporated experimental approaches, it seems unlikely that Charles II institutionalizing Hobbes’s approach would have significantly derailed the momentum that experiment had already attained. Even if insertible in history, the counterfactual of political legitimation for Hobbes’s approach would have been swamped by reversionary second-order counterfactuals, rather than amplified by reinforcing ones.[9]

Historians prone to emphasizing underdetermination focus on contingency (Hobbes vs. Boyle), without explaining where this alternative branching point would have led. In the extreme case, believing that contingency runs rife in history makes it difficult to see how an alternative, Hobbesian science could be sustained, as new contingencies make historical change open and changeable at every moment.[10] Alternatively, many historians believe that even if a counterfactual event could have occurred, the historical context includes a multitude of reasons why the same historical outcome would result no matter what, as multiple causes shape any historical trajectory, not single events. In the extreme case, this view reflects the “essay question” approach to history, where one gets credit for providing the most comprehensive list of causes for a big historical event, like the French Revolution or World War I. Instead, I will argue for a more balanced perspective on historical contingency, one that highlights the role of historical contingency while also delineating how likely it was that alternative outcomes could have been sustained in practice.

Could non-toxic, or less toxic, chemicals have been produced in the twentieth century? I aim to show that well-developed alternative approaches to understanding the safety of hazardous substances existed in a number of fields. These alternatives emphasized eliminating inherently toxic substances on the assumption that low levels of toxic substances likely remained harmful, a view called the per se standard. By contrast, the dominant approach sought to identify safe levels of any substance on the assumption that “the solution to pollution is dilution,” a view called the de minimis standard. In essence, one could seek to distinguish toxic from benign substances, or one could identify safe and toxic levels of any substance.

The latter view won out, with partial exceptions to be discussed, but the arguments for the former were (and remain) compelling, so that the claim that a different outcome was possible is well supported by historical documentation. At the same time, science does not operate in isolation from larger societal forces. In the context of powerful institutional support for the toxicologists’ de minimis standard, the claim that the eclipse of the per se standard was a near-run thing within science must be supplemented by the recognition that an alternative scientific trajectory would not have been sustainable without countervailing public pressure and alternative sources of financial support.[11]

Hindsight Bias and the Social Construction of Technology

Methodological developments on the use of counterfactuals in recent political history have tried to counter the “hindsight bias” identified by experimental psychologists. After the fact, political historians have tended to treat as inevitable outcomes that would have been seen as highly improbable before they occurred (rise of the West, negotiated settlement of the Cuban missile crisis, fall of the Soviet Union). To develop critical alternatives to established historiographical questions on such topics, it helps to keep in mind a typical three-stage pattern of development: a) an early open stage showing initial unpredictability of outcomes, followed by b) a growing inflexibility as developments lock in one trajectory, and c) closure locking in suboptimal arrangements.[12]

These stages parallel the stages of Harry Collins’ empirical programme of relativism (EPOR), which identified three stages for analysis of a scientific controversy, an approach later extended to technology by the social construction of technology (SCOT). First, the analyst focuses on the interpretive flexibility associated with the open character of interpreting data or designing a technology. Second, the analyst focuses on the causes of closure, where the range of interpretations or design options narrows. Third, the analyst focuses on the role of the wider social and political context in shaping outcomes.[13] This approach helps delineate how certain suboptimal outcomes in modern science and technology came about, especially when the analysis incorporates Collins’ argument that expert core sets that are artificially constrained can lead to premature closure by ignoring qualified experts from different fields.[14] This provides a way to develop a model of suboptimal outcomes in technological developments as well, along the lines of Diane Vaughan’s influential treatment of the “normalization of deviance” leading to the Challenger launch explosion.[15]

Indeed, the minority view that there was no safe level did achieve scientific support in the study of radiation and carcinogenic substances. However, the dominant paradigm of understanding risk by identifying safe and unsafe levels won out in the American chemical industry, and can be seen as a suboptimal outcome in the sense that closure proceeded by regulatory adoption of one side of a scientific debate as the result of corporate pressure to maintain economically important chemicals in production. In essence, a premature closure of scientific debate (and resulting chemical design desiderata) was effected, as the result of stage three economic and regulatory influence. Thus, even as we can see the moment of contingency that made an alternative approach to chemical synthesis and production possible, we can also identify those further causes that helped ensure that the outcome occurred as it did. The result is to see more clearly just how strongly the economic interests of chemical manufacturers shaped modern chemistry and chemical engineering.

De Minimis and Per Se Standards

Toxicologists distinguish two approaches to regulating chemicals: a de minimis standard that identifies a threshold below which chemicals are presumed safe and a per se standard where no safe level is defined for chemicals found to be toxic. With some important qualifications to be discussed, toxicology adopted a de minimis standard, an approach that was solidified from a regulatory point of view by the 1958 amendment to the Federal Food, Drug, and Cosmetic Act. The approach reflects both industry interest in defending safe levels of chemicals deemed economically essential and disciplinary closure of scientific controversy in toxicology, despite continued objections to the de minimis standard by outside experts in two fields: cancer researchers studying the effects of chemical substances, especially plastics and pesticides, and geneticists researching low-dose radiation in nuclear fallout.

Fig. 2: Representative James Delaney chaired the U.S. House of Representatives Select Committee to Investigate Chemicals in Food Production beginning in 1950. His efforts to regulate consumer exposure to pesticides and plastics led to the 1958 amendment to the Federal Food, Drug, and Cosmetic Act, which established a de minimis standard for regulating most chemicals, with carcinogens subject to a per se standard. By US Government Printing Office [public domain], via Wikimedia Commons.

Carcinogenesis and the Per Se Standard

The scientific background for the Delaney amendment was shaped by the testimony of cancer researchers, especially Wilhelm Hueper, a significant influence on Rachel Carson and the director of the Environmental Cancer Section of the National Cancer Institute. His testimony reflected research practices and questions distinct from those in toxicology. Whereas animal experiments in toxicology proceeded by large doses and examination of acute effects, with identification of safe levels on that basis, Hueper described research on the effects of synthetic estrogens at levels judged non-toxic that nevertheless led to cancer. He also argued for the significance of exposure during fetal development, anticipating the approach of Theo Colborn and proponents of the endocrine disruption hypothesis.[17]

High-dose experiments facilitated speedy identification of toxic substances, but extrapolating to lower doses was fraught with difficulty. Extrapolating from high-dose experiments while assuming a linear dose-response curve would imply no threshold of absolute safety. Toxicologists sought to define safe levels of economically important chemicals using a model where linear effects begin after a threshold, in effect blocking consideration of low-dose effects. In a situation where cost considerations prohibited reliance upon low-dose, longer-term studies, the choice of how to extrapolate from high-dose animal studies reflected political differences over regulatory philosophy.[18] In this context, toxicology’s role as a discipline shaped by the financial support of chemical companies determined the kinds of risks that were considered worthy of research, neglecting environmental causes of cancer and focusing on acute effects of significant doses in order to define safe levels of workplace exposure.[19]
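The difference between the two extrapolations can be made concrete with a minimal sketch. The numbers below are hypothetical and chosen only for illustration (they are not drawn from any study discussed here), and the function names are likewise invented. Both models are fitted to the same single high-dose observation, yet they disagree about whether any risk exists at low doses.

```python
def lnt_risk(dose, slope):
    """Linear no-threshold: excess risk is proportional to dose down to zero."""
    return slope * dose


def threshold_risk(dose, slope, threshold):
    """Threshold model: no excess risk below the threshold, linear above it."""
    return slope * max(0.0, dose - threshold)


# Hypothetical high-dose animal result: 20% excess risk at 100 dose units.
high_dose, observed = 100.0, 0.20
threshold = 10.0  # hypothetical "safe level" assumed by the threshold model

# Fit each model so that it reproduces the same high-dose observation.
lnt_slope = observed / high_dose
thr_slope = observed / (high_dose - threshold)

for dose in (1.0, 5.0, 10.0, 50.0):
    print(dose, lnt_risk(dose, lnt_slope), threshold_risk(dose, thr_slope, threshold))
```

Under these assumptions the threshold model assigns zero risk to every dose at or below 10 units, while the linear model assigns a small but nonzero risk to each of them. The high-dose data alone cannot decide between the two, which is why the choice of model carried regulatory weight.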

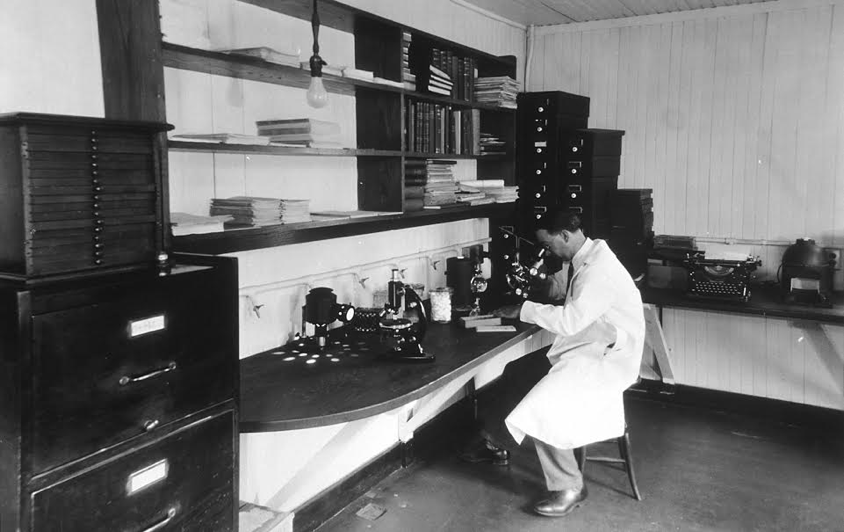

Fig. 3: Wilhelm Hueper, director of the Environmental Cancer Section of the National Cancer Institute from 1938 to 1964, shown here at the Central Cancer Research Laboratory. This image was released by the National Cancer Institute, part of the National Institutes of Health, with the ID 1858. By unknown photographer [public domain], via Wikimedia Commons

After leaving Du Pont, Hueper continued his research at a pharmaceutical firm and published his Occupational Tumors and Allied Diseases in 1942 before being hired by the NCI to direct its new Environmental Cancer Section. While ostensibly free to conduct research, he encountered frequent restrictions on publication and travel, with carbon copies of his papers submitted surreptitiously to Du Pont for commentary before review. He was forced to withdraw a presentation on his research on Colorado uranium miners before the Colorado State Medical Society (CSMS) in 1952 when Atomic Energy Commission (AEC) oversight led to its prohibition. Shields Warren, the AEC’s director of biology and medicine, asked the NCI to fire Hueper after he circulated the paper to the CSMS President. While not fired, he was subsequently banned from cancer research on humans.[22]

Even though Hueper was influential in securing legislation putting forth a per se standard for carcinogens in the Delaney amendment, in practice the FDA process for implementing the amendment was subject to regulatory capture. Jerome Heckman, a lawyer for the Society of the Plastics Industry, argued that when quantitative evidence of significant contamination of food by polymers in packaging was lacking, such chemicals did not constitute a food additive under the law. In effect, Heckman was able to turn the Delaney per se standard into a de minimis standard, while grandfathering chemicals already in use and streamlining the regulatory approval process.[23] The Manufacturing Chemists’ Association (MCA) and other manufacturing associations produced doubt about claims of harm under the guise of “trade association science,” while corporate literature reviews in toxicology routinely ignored evidence of carcinogenicity.[24] Funded by industry and using experimental techniques that blocked consideration of low-level effects, toxicology could itself be seen as a scientific field captured by industry (call it “disciplinary capture”).

The Fallout Debate and the Linear No-Threshold Model

During the height of atmospheric nuclear weapons testing in the 1950s, public debate on the effects of radioactive fallout informed debates about chemical toxicity as well. Rachel Carson drew on the invisible and insidious character of strontium-90, its bioaccumulation in the food chain, and public doubts about governmental forthrightness to frame her discussion of the dangers of pesticides.[25] Debate about whether nuclear fallout presented a real danger to the U.S. public split along disciplinary lines. Among geneticists, the idea that genetic damage from radiation increased linearly without a threshold (the Linear No-Threshold Model or LNT) dominated, while most physicians involved in the nuclear fallout debate held that low-level fallout was safe.[26] Physicians were concerned that public dissemination of the no-threshold model would cause public panic and make medical use of radiation impossible. Hermann Muller’s demonstration in 1927 of radiation-induced genetic mutations led him to express caution on the use of X-rays for medical therapies and provided the foundation for the linear model. Since that time, geneticists have complained that the implications of the LNT model were ignored by medical practitioners and regulators. Just as with the study of carcinogens, studies of long-term chronic exposure to radiation were neglected in favor of focusing on acute effects.[27]

There is strong evidence of neglect and suppression of the geneticists’ position, which called for a precautionary approach based upon emerging understanding of the role of radiation in inducing genetic damage (and possibly cancer). Geneticists also recognized that the exact level of harm for human populations could not be demonstrated by the methods of the time. Geneticists were largely excluded from the Manhattan Project’s assessment of radiation risk, where physicians, physicists, and radiologists took a toxicological approach focused on immediate damage produced above a threshold.[28] The Manhattan Project did fund studies by geneticists at the University of Rochester. Both Curt Stern’s research on fruit flies and Donald R. Charles’ on mice ultimately supported the linear interpretation, though an official Manhattan Project retrospective implied that Stern’s research had demonstrated the existence of a threshold. The Los Alamos Scientific Laboratory’s 1950 publication, The Effects of Atomic Weapons, ignored these studies altogether.[29]

The consensus among geneticists following World War II, and particularly during the “fallout debate” about atmospheric nuclear testing in the 1950s, was that each additional amount of exposure to radiation brought additional mutations, so that the introduction of any new sources of radiation exposure constituted a significant hazard. By contrast, physicians and AEC regulators compared new exposures to naturally occurring background radiation in order to judge new exposures as negligible.[30] These contrasting views on linearity and thresholds were reflected in differing assessments of radiation by the Genetics Panel and the Pathology Panel of the 1956 National Academy of Sciences (NAS) Committee on the Biological Effects of Atomic Radiation (BEAR).[31] In 1955, the AEC rejected Muller’s paper on the genetic risks of radiation, which he had developed for the United Nations International Conference on the Peaceful Uses of Atomic Energy, a conference that promoted Eisenhower’s “Atoms for Peace” program. The rejection stemmed from doubts about Muller’s political loyalty to the U.S. and from his public airing of fallout dangers; the paper discussed radiation damage received by victims of the Hiroshima and Nagasaki bombs and supported linearity.[32]

Later, in a case parallel to Hueper’s of significant scientific work suppressed by governmental agencies, the AEC cut funding for and suppressed John Gofman’s efforts at the Lawrence Livermore National Laboratory (LLNL) to extend the geneticists’ LNT model for low-dose radiation from genetic damage to somatic effects, particularly long-term damage leading to cancer.[33] The concept of a tolerance dose had become accepted by the 1930s and identified thresholds of radiation exposure above which somatic damage, such as reddening of skin, would take place.[34] Thresholds for somatic effects of radiation were endorsed by the NAS BEAR committee in 1959 and were widely accepted through the 1960s. Gofman reported chromosomal alterations in cancer cells in 1967, supporting the hypothesis that cancer was caused by genetic damage to somatic cells. By 1969, he had presented evidence that low-dose radiation could induce chromosomal damage.[35] The AEC pressured LLNL to rein in Gofman after he argued that Federal Radiation Council (FRC) standards were ten times higher than a precautionary approach would require. Gofman’s funding was cut, he was threatened with dismissal, and prepublication approval was required.[36] Gofman’s no-threshold approach to cancer did finally make it into the 1972 NAS Biological Effects of Ionizing Radiation (BEIR) report, but the ensuing public controversy ended his research.[37]

The LNT model finally gained traction as a way to estimate radiation risk quantitatively, but regulation of low-dose exposure remains elusive. In regulating radiation exposure, the AEC and its successor, the Nuclear Regulatory Commission (NRC), finally adopted the LNT model along with vague language that exposure should be “as low as practicable” to encourage nuclear plant designers to lessen exposures below formal threshold levels.[38] The ascendancy of the LNT model reflected a compromise between realist and instrumentalist interpretations of the model, as the 1972 NAS BEIR report argued that the model represented “the only workable approach to numerical estimation of the risk in a population” despite the fact that the model might overstate low-level risks and neglect the existence of cell-repair mechanisms.[39]

Nonetheless, in contrast to threshold models, all levels of radiation exposure needed to be balanced against specific benefits. At the same time, the document incorporated the view of critics who felt that risks of nuclear emissions were being exaggerated compared to energy sources such as coal.[40] A developed, if contested, alternative to the threshold model had emerged for handling radiation exposure, albeit within a technological milieu where increased risk was seen as unavoidable and rejection of the technology as unacceptable. Gofman, however, would go on to question publicly whether nuclear power was worth the risks.

Conclusion

It is certainly possible that no-threshold approaches might be suitable for some areas of study, like carcinogenic chemicals and low-dose radiation, while being inappropriate for toxicology more generally. However, the way these arguments played out, different extrapolations from high-dose animal experiments reflected different political assumptions about risk.[41] Toxicology’s emphasis on threshold harms was used as a shield to prevent further investigation of low-dose harms, as well as to focus attention on acute harms rather than long-term effects. The no-threshold approach was implemented in the Delaney amendment, but was quickly rendered moot by regulatory capture, as pressure from the chemical industry shaped the FDA’s interpretation of the amendment.

The most significant outcome of the threshold model was that industrial chemicals, synthetic pesticides, and plastics used in consumer goods continued to be developed and manufactured even where evidence of toxicity existed. A further investigation of a second-order counterfactual would be necessary to judge the extent to which substitute chemicals or alternative manufacturing processes could have been found had regulatory intervention followed a more preemptive approach. Moreover, even given greater acceptance of the no-threshold approach within the scientific community, corporate pressure would likely have continued to prevent a significant shift in the type of chemicals manufactured, at least absent a larger environmental movement to challenge business as usual. What counterfactual analysis does reveal is that serious challenges to business as usual did occur. But those challenges were overwhelmed by the singular importance granted to industrial chemicals and pharmaceutical products under the reigning American political economy of the day. Lack of knowledge of harms alone did not result in the proliferation of toxic chemicals. That dangerous history resulted from the power of corporate interests in shaping the kinds of chemicals that would be developed, as well as the methods to evaluate and regulate their safety.

Sources

Anastas, Paul T. and John C. Warner. Green Chemistry: Theory and Practice. Oxford: Oxford University Press, 1998.

Brown, John K. “A Different Counterfactual Perspective on the Eads Bridge.” Technology’s Stories, 1, no. 3 (2014), http://www.historyoftechnology.org/tech_stories.

Brown, John K. “Not the Eads Bridge: An Exploration of Counterfactual History of Technology.” Technology and Culture, 55, no. 3 (2014): 521-59.

Carlson, Elof Axel. Genes, Radiation, and Society: The Life and Work of H. J. Muller. Ithaca, N.Y.: Cornell University Press, 1981.

Carson, Rachel. Silent Spring. New York: Fawcett Crest, 1962.

Cavers, David F. “The Food, Drug, and Cosmetic Act of 1938: Its Legislative History and Its Substantive Provisions.” Law and Contemporary Problems, 6, no. 1 (1939): 2-42.

Collins, H. M. “Public Experiments and Displays of Virtuosity: The Core-Set Revisited.” Social Studies of Science, 18 (1988): 725-48.

Collins, H. M. “Stages in the Empirical Programme of Relativism.” Social Studies of Science, 11, no. 1 (1981): 3-10.

Dear, Peter. Discipline and Experience: The Mathematical Way in the Scientific Revolution. Chicago: University of Chicago Press, 1995.

Elster, Jon. Logic and Society: Contradictions and Possible Worlds. New York: Wiley, 1979.

Jolly, J. Christopher. Thresholds of Uncertainty: Radiation and Responsibility in the Fallout Controversy. PhD dissertation, Oregon State University, 2003.

Jones, Geoffrey and Christina Lubinski. “Historical Origins of Environment Sustainability in the German Chemical Industry, 1950s-1980s.” Working Paper, Harvard Business School, August 30, 2013.

Kathren, Ronald L. “Historical Development of the Linear Nonthreshold Dose-Response Model as Applied to Radiation.” Pierce Law Review, 1, no. 1/2 (2003): 5-30.

Lebow, Richard Ned. Forbidden Fruit: Counterfactuals and International Relations. Princeton, N.J.: Princeton University Press, 2010.

Lebow, Richard Ned and Janice Gross Stein. We All Lost the Cold War. Princeton, N.J.: Princeton University Press, 1994.

Lutts, Ralph H. “Chemical Fallout: Rachel Carson’s Silent Spring, Radioactive Fallout, and the Environmental Movement.” Environmental Review, 9, no. 3 (1985): 210-25.

Lynch, William T. “Arguments for a Non-Whiggish Hindsight: Counterfactuals and the Sociology of Knowledge.” Social Epistemology, 3, no. 4 (1989): 361-65.

Lynch, William T. “King’s Evidence.” New Scientist, Aug. 20, 2005, 39-40.

Lynch, William T. “Second-Guessing Scientists and Engineers: Post Hoc Criticism and the Reform of Practice in Green Chemistry and Engineering.” Science and Engineering Ethics, Sept. 14, 2014, doi: 10.1007/s11948-014-9585-1.

Oreskes, Naomi, and Erik M. Conway. Merchants of Doubt: How a Handful of Scientists Obstructed the Truth on Issues from Tobacco Smoke to Global Warming. New York: Bloomsbury Press, 2010.

Pinch, Trevor J. and Wiebe E. Bijker. “The Social Construction of Facts and Artifacts: Or How the Sociology of Science and the Sociology of Technology Might Benefit Each Other.” In The Social Construction of Technological Systems: New Directions in the Sociology and History of Technology, edited by Wiebe E. Bijker, Thomas P. Hughes, and Trevor J. Pinch, 11-44. Cambridge, Mass.: MIT Press, 2012.

Proctor, Robert N. Cancer Wars: How Politics Shapes What We Know and Don’t Know About Cancer. New York: Basic Books, 1995.

Ross, Benjamin and Steven Amter. The Polluters: The Making of Our Chemically Altered Environment. Oxford: Oxford University Press, 2010.

Schatzberg, Eric. “Counterfactual History and the History of Technology.” Technology’s Stories, 1, no. 3 (2014), http://www.historyoftechnology.org/tech_stories.

Semendeferi, Ioanna. “Legitimating a Nuclear Critic: John Gofman, Radiation Safety, and Cancer Risks.” Historical Studies in the Natural Sciences, 38, no. 2 (2008): 259-301.

Tetlock, Philip E., Richard Ned Lebow, and Geoffrey Parker, eds. “What-If?” Scenarios That Rewrite World History. Ann Arbor, Mich.: University of Michigan Press, 2006.

Tetlock, Philip E. and Geoffrey Parker. “Counterfactual Thought Experiments: Why We Can’t Live without Them and How We Must Learn to Live with Them.” In “What-If?” Scenarios That Rewrite World History, edited by Philip E. Tetlock, Richard Ned Lebow, and Geoffrey Parker, 14-44. Ann Arbor, Mich.: University of Michigan Press, 2006.

Vaughan, Diane. The Challenger Launch Decision: Risky Technology, Culture, and Deviance at NASA. Chicago: University of Chicago Press, 1996.

Vinsel, Lee. “The Value of Counterfactual Analysis: Investigating Social and Technological Structure.” Technology’s Stories, 1, no. 3 (2014), http://www.historyoftechnology.org/tech_stories.

Vogel, Sarah A. Is It Safe?: BPA and the Struggle to Define the Safety of Chemicals. Berkeley: University of California Press, 2013.

Walker, J. Samuel. Permissible Dose: A History of Radiation Protection in the Twentieth Century. Berkeley: University of California Press, 2000.

Wax, Paul M. “Elixirs, Diluents, and the Passage of the 1938 Federal Food, Drug and Cosmetic Act.” Annals of Internal Medicine, 122, no. 6 (1995): 456-61.

Woodhouse, Edward J. “Change of State? The Greening of Chemistry.” In Synthetic Planet: Chemical Politics and the Hazards of Modern Life, edited by Monica J. Casper, 17

* History Department, 656 W. Kirby, Wayne State University, Detroit MI 48202.

I am grateful to Jack Brown and Suzanne Moon for helpful suggestions.

[1] Benjamin Ross and Steven Amter, The Polluters, 3-5, 17-21, 25.

[2] Ibid., 10-16, 21-27.

[3] Sarah A. Vogel, Is It Safe?, 34-35; Ross and Amter, The Polluters, 34.

[4] Paul M. Wax, “Federal Food, Drug and Cosmetic Act”; Vogel, Is It Safe?, 20-22, 34-35; David F. Cavers, “Food, Drug, and Cosmetic Act,” 27.

[5] Paul T. Anastas and John C. Warner, Green Chemistry, 17-18. Other bad choices Anastas and Warner discuss include the neglect of synergistic effects and the failure to provide a full-cost accounting of the life-cycle of a chemical.

[6] Edward J. Woodhouse, “Change of State.”

[7] John K. Brown, “Not the Eads Bridge”; Brown, “A Different Counterfactual Perspective”; Eric Schatzberg, “Counterfactual History”; Lee Vinsel, “Value of Counterfactual Analysis.”

[8] Philip E. Tetlock and Geoffrey Parker, “Counterfactual Thought Experiments”; Jon Elster, Logic and Society.

[9] William T. Lynch, “Non-Whiggish Hindsight”; William T. Lynch, “King’s Evidence”; Peter Dear, Discipline and Experience.

[10] But see Brown, “A Different Counterfactual Perspective.”

[11] Comparative history suggests that the strength of political opposition to chemical pollution plays a role. Geoffrey Jones and Christina Lubinski, “Historical Origins of Environmental Sustainability,” 19-20, 25-28, 36-37, argue that, until the 1970s, the German chemical companies Bayer and Henkel resisted regulation just as much as U.S. chemical companies did. At that time, the emergence of a politically successful Green Party and environmental protests directed at each company’s headquarters, where the firms were susceptible to pressure because their regional ties were stronger than those of U.S. corporations, led to a more proactive and precautionary approach.

[12] Tetlock and Parker, “Counterfactual Thought Experiments”; Tetlock, Lebow, and Parker, Unmaking the West; Richard Ned Lebow, Forbidden Fruit; Richard Ned Lebow and Janice Gross Stein, We All Lost the Cold War.

[13] H. M. Collins, “Empirical Programme”; Trevor J. Pinch and Wiebe E. Bijker, “Social Construction.”

[14] H. M. Collins, “Public Experiments,” 739-42.

[15] Diane Vaughan, Challenger Launch Decision; William T. Lynch, “Second-Guessing Engineers.”

[16] Vogel, Is It Safe?, ch. 1.

[17] Ibid., 24-30.

[18] Robert N. Proctor, Cancer Wars, ch. 7.

[19] Vogel, Is It Safe?, 7-9, 94, 201-202.

[20] Ibid., 26-30.

[21] Proctor, Cancer Wars, 39-41.

[22] Ibid., 44-45.

[23] Vogel, Is It Safe?, 138-42, 1-3.

[24] Proctor, Cancer Wars, 125-32; Naomi Oreskes and Erik M. Conway, Merchants of Doubt; Vogel, Is It Safe?, 38.

[25] Rachel Carson, Silent Spring, 5-7; Ralph H. Lutts, “Chemical Fallout.”

[26] J. Christopher Jolly, Thresholds of Uncertainty; Ronald L. Kathren, “Historical Development.”

[27] Jolly, Thresholds of Uncertainty, 7, 50, 217-41, 76-82.

[28] Ibid., 229.

[29] Ibid., 78-83, 106-107.

[30] J. Samuel Walker, Permissible Dose, 10-11; Jolly, Thresholds of Uncertainty, 162-266.

[31] Jolly, Thresholds of Uncertainty, 314-454.

[32] Elof Axel Carlson, Genes, Radiation, and Society, 356-67; Jolly, Thresholds of Uncertainty, 253-66.

[33] Ioanna Semendeferi, “Legitimating a Nuclear Critic,” 259-65.

[34] Walker, Permissible Dose, 2-10; Barton C. Hacker, Dragon’s Tail, 14-19; Kathren, “Historical Development,” 11-12.

[35] Semendeferi, “Legitimating a Nuclear Critic,” 263-65, 267-69.

[36] Ibid., 271-73, 277-78.

[37] Ibid., 297, 278; Walker, Permissible Dose, 47-51.

[38] Walker, Permissible Dose, 31-35, 44-56.

[39] Quoted in ibid., 49. See also Kathren, “Historical Development,” 14, who notes that linear extrapolation “was really a statement of the upper level of risk in the low dose region of the dose-response curve—the very region of interest from a protection standpoint and the very region in which dose-response data were not available.”

[40] Walker, Permissible Dose, 50.

[41] Proctor, Cancer Wars, ch. 7.