The Human as a System Component in Nuclear Installations: Jens Rasmussen and High-Risk Systems, 1961–1983

Technology’s Stories vol. 4, no. 1 – doi: 10.15763/JOU.TS.2016.6.1.03

PDF: Kant_Human as System Component

In 1976, the Danish engineer Jens Rasmussen[1] was well aware that his approach to studying human performance in high-risk technological contexts was breaking new ground. He commented wryly,

“When entering a study of human performance in real-life tasks one rapidly finds oneself ‘rushing in where angels fear to tread’. It turns out to be a truly interdisciplinary study for which an acceptable frame of reference has not yet been established, and interaction between rather general hypothesis, tests, methods, and detailed analysis is necessary.”[2]

This comment was not unwarranted. In the 1960s, the traditional engineering approach to high-risk nuclear systems treated the problem of system reliability strictly in technical terms. In contrast, Rasmussen recognized that the human operator had to be addressed together with the technological components as a unified construct.[3] Essentially, the challenge Rasmussen faced was this: how to characterize the human operator as part of the high-risk nuclear system without reducing the person to a mechanistic cog, while ensuring that the overall system functioned correctly and without accidents.

Following Rasmussen’s approach requires special emphasis on the material dimension of the human as a component of high-risk systems. Rasmussen’s work between 1961 and 1983 highlights three interrelated aspects of assimilating the human as a system component (the materiality of risk):[4] first, the redefinition of the problem of reliability to include the human; second, the formulation of the concept of the human in technical terms; and finally, the characterization of human performance in light of the first two aspects.

Technical reliability refers to the ability of components, machines, or systems to function consistently in the manner for which they were designed. Traditionally, the problem of reliability during system operation was treated in terms of its technical aspects. However, technical design alone cannot anticipate every fault that may arise in a system. First, the designer may not be able to take stock of all combinations of rare, emergent faults. Second, it may not be economically feasible to provide extra equipment for very rare faults. Therefore, in contrast to a technical component, a flexible human operator could provide a decisive advantage in decision making when handling rare, abnormal situations. However, introducing the operator as a system component has its drawbacks. The human is flexible but at times also prone to slips and mistakes:

“[…] the human element is the imp of the system. His inventiveness makes it impossible to predict the effects of his actions when he makes errors, and it is impossible to predict his reaction in a sequence of accidental events, as he very probably misinterprets an unfamiliar situation.”[5]

Therefore, to ensure overall reliability, Rasmussen introduced the operator as a system component, taking into account both the operator’s advantages and drawbacks.

In conceptualizing the human as a material aspect of the system, two themes emerged as prominent in Rasmussen’s approach. First, he did not reduce the human to a mechanistic component, while still ensuring that the operator functioned as part of the technology loop; that is, the operator should feel like a responsible, decision-making member and not just a cog in the process. Thus, the human was construed as a system component, while the technical system was the human’s environment.[6] Second, Rasmussen adopted an engineering solution to the problem while supporting the operator as a system component. He recognized that the views from physiology, psychology, and other related sciences and philosophies were important but not directly amenable to systems design. Therefore, drawing on the requirements of systems design as well as on viewpoints from other disciplines, he constructed a model of the human operator as a system component.

Along with the engineering dimension of the problem, Rasmussen was influenced by a range of philosophers (such as H. Dreyfus and A. Sloman), neuroscientists (such as J.V. Lettvin, D. Hubel, and W.S. McCulloch), physiologists (such as P. Weiss), and psychologists (such as K. Pribram and J. Bruner), among many other theorists. In particular, Rasmussen drew one conceptualization of the operator from the French novelist Xavier De Maistre’s book Voyage autour de ma chambre, whose account of human nature clearly demonstrates the tension between the flexibility and fallibility of humans.[7] De Maistre viewed human nature as a ‘système de l’âme et de la bête’ (a system of soul and beast). The soul commands the beast; however, at times the beast has its own way of acting. As a result, people are not always themselves, even in their everyday activities. Clarifying the notion of the system of soul and beast, the literary theorist David McCallam[8] notes:

“In the Voyage autour de ma chambre, the narrator’s ‘âme’ and his ‘bête’ are both forms of spirit, of intelligence. Theirs is a distinction of quality, not of nature […] Unlike the solitary lofty soul, the beast is a massy, social mind negotiating everyday interactions in the material world.”

Even though the soul and beast form a conjoined system, they have a difficult relationship. When the beast acts on its own without the supervision of the soul, the result can be errors and danger. To illustrate this danger, De Maistre describes a situation in which he was using tongs to toast his bread.[9] As the event unfolded, unbeknown to De Maistre, a flaming log fell on the tongs and heated them. De Maistre writes that his soul had wandered off in thought and was not controlling his beast at the time, so the beast acted on its own. Being a creature of habit, the beast made De Maistre put his hand on the hot tongs to take the bread, and he was burnt in the process. This accident shows that slips and lapses in everyday activity often lead people to harm. These slips and lapses can be accounted for by addressing the fundamental basis of human nature.

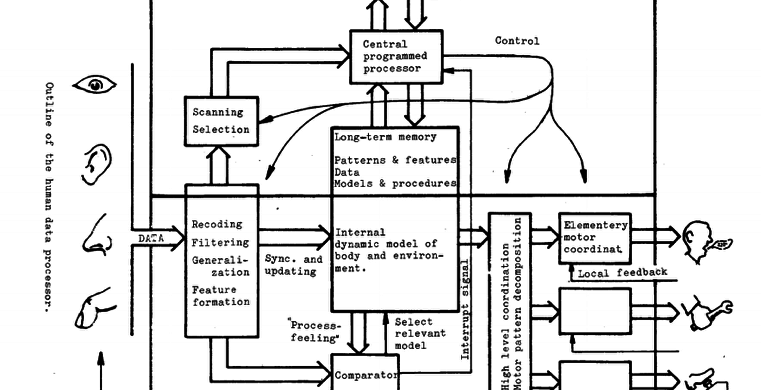

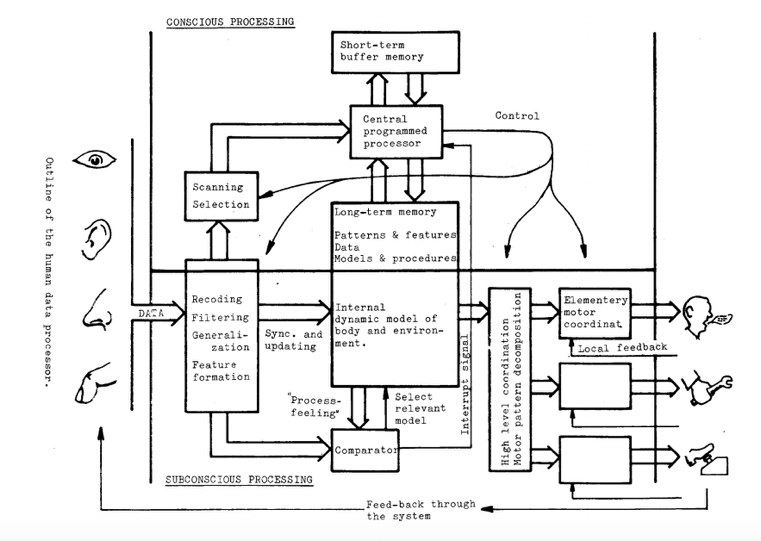

Like De Maistre, Rasmussen observed this fallibility in everyday activity in his research with human operators. He recognized that in their everyday activities, operators grow accustomed to their tasks and adapt to their circumstances. This adaptation often leads them to skip steps in their procedures without being aware of it, which may drive the system towards malfunction. The challenge for the engineer was to provide solutions that would avert malfunctions without penalizing operators for their missed steps and slips. Averting harmful situations caused by slips and mistakes required a model of the human operator that would support such a conception of human activity. Not surprisingly, De Maistre’s system of soul and beast forms the key basis for understanding the material dimensions of Rasmussen’s formulation of the human operator as two integrated subsystems. Akin to the soul and beast, Rasmussen’s human operator has two subsystems: conscious and subconscious (see Figure 1). The subconscious subsystem is a processing unit that synchronizes the body with its surroundings, i.e., it provides ongoing fast control. In contrast, the conscious subsystem is depicted as having limited speed, and its primary task is data management and handling. Further, in unique situations, the conscious subsystem is called upon to conduct “improvisations, rational deductions; and symbolic reasoning.”[10] At times, it also coordinates and controls the subconscious subsystem. Together, the two subsystems form the model of the operator as a system component, accounting for reasonable behavior as well as slips and lapses.

Figure 1. Model of the human processor, Rasmussen, Bits, p. 9. Notice the two subsystems of conscious and subconscious processing, similar to De Maistre’s ‘système de l’âme et de la bête’. © Jens Rasmussen. Permission has been received from the author to reproduce the figure.

In addition to the model of the human operator, Rasmussen addressed operator performance from the late 1960s to the mid-1980s. From studies of operator performance, he found that operators often jump between the concepts they hold in mind while diagnosing the functioning of the technical system. These leaps are informed by a generalized “process feel” that operators develop for how the nuclear process plant functions. Thus, there was a pressing need to comprehend broader ways of characterizing human activity in technological contexts. To characterize the material dimension of human activity, Rasmussen introduced taxonomies of human error, as well as categories of human performance suited for systems design, based on verbal protocol studies and the examination of accident reports.[11] As with the human operator model, the categories of human performance were not an application of theories from psychological science.[12] Rather, Rasmussen used insights from the philosophers Ernst Cassirer and Alfred North Whitehead (among many other theorists) to emphasize that human performance in technical contexts must be comprehended holistically through three broad categories: skill-based, rule-based and knowledge-based.[13] Skill-based behavior is sensory-motor behavior that is automatic and proceeds without conscious control. Rule-based behavior relies on stored procedures and heuristics gained from experience. Knowledge-based behavior relies on explicit thinking and planning in unfamiliar situations.

Together with the two subsystems of the human processor, these three categories accounted for the material aspects of the human operator’s behavior in technical systems, including the possibilities of malfunction. Averting systemic malfunctions required designing technology to support human performance at all three levels, in accordance with the basic human processor model. This view of the human operator and of operator performance was not captured by existing engineering or scientific approaches, which led Rasmussen to comment wryly that he was rushing in where “angels fear to tread”.[14]

In contrast to the traditional engineering thinking of the 1960s, Rasmussen formulated an alternative model of the operator and characterized human performance in technological contexts, emphasizing the material aspects of risk. In doing so, Rasmussen ensured that the human operator was comprehensively encapsulated as part of the system without being reduced to a mechanistic cog: the operator was a system component, while the system served as the operator’s technological context.

Bibliography

De Maistre, Xavier. A Journey Around My Room. Translated by Henry Attwell, New York, NY: Hurd and Houghton, 1871. https://archive.org/details/journeyroundmyro00maisrich (Originally published in 1794).

Jensen, Å., J. Rasmussen, and P. Timmermann. “Analysis of Failure Data for Electronic Equipment at Risø.” Risø Report No. 38, September 1963.

Kant, Vivek. “Supporting the Human Life-Raft in Confronting the Juggernaut of Technology: Jens Rasmussen, 1961–1986.” Applied Ergonomics (in press). doi:10.1016/j.apergo.2016.01.016.

McCallam, David. “Xavier De Maistre and Angelology.” In (Re-)Writing the Radical: Enlightenment, Revolution and Cultural Transfer in 1790s Germany, Britain and France, edited by Maike Oergel, 239–50. Berlin: De Gruyter, 2012.

Nielsen, Henry, Keld Nielsen, Flemming Petersen, and Hans Siggaard. “Risø and the Attempts to Introduce Nuclear Power Into Denmark.” Centaurus 41, no. 1–2 (1999): 64–92. doi:10.1111/j.1600-0498.1999.tb00275.x.

Nielsen, Henry, and Henrik Knudsen. “The Troublesome Life of Peaceful Atoms in Denmark.” History and Technology 26, no. 2 (2010): 91–118. doi:10.1080/07341511003750022.

Pedersen, O. M., and J. Rasmussen. “Mechanisms of Human Malfunction Definition of Categories: Revisions and Examples.” Risø-Elek-N, No. 27, 1980.

Rasmussen, J. “Man-Machine Communication in the Light of Accident Record.” Presented at the International Symposium on Man-Machine Systems, Cambridge, September 8–12, 1969. In IEEE Conference Records, No. 69 (58-MMS. Vol. 3).

Rasmussen, J. “Man as Information Receiver in Diagnostic Tasks.” In Proceedings of the IEE Conference on Displays, IEE Conference Publication No. 80, Loughborough, September 1971, 271–276.

Rasmussen, J. “The Role of the Man-Machine Interface in Systems Reliability.” Risø-M-1673, 1973.

Rasmussen, Jens. “Outlines of a Hybrid Model of the Process Plant Operator.” In Monitoring Behavior and Supervisory Control, edited by Thomas B Sheridan and Gunnar Johannsen, 371–83, Boston, MA: Springer US, 1976. doi:10.1007/978-1-4684-2523-9_31.

Rasmussen, J. “On the Structure of Knowledge: A Morphology of Mental Models in a Man-Machine System Context.” Risø-M-2192, 1979.

Rasmussen, J. “The Role of Cognitive Models of Operators in the Design, Operation and Licensing of Nuclear Power Plants.” In Workshop on Cognitive Modelling of Nuclear Plant Control Room Operators, Dedham, Massachusetts, August 1982, 13–35. NUREG/CR-3114, ORNL/TN-8614. Oak Ridge National Laboratory. http://web.ornl.gov/info/reports/1982/3445606041672.pdf (accessed October 3, 2013).

Rasmussen, J. “Skills, Rules, and Knowledge; Signals, Signs, and Symbols, and Other Distinctions in Human Performance Models.” IEEE Transactions on Systems, Man, and Cybernetics SMC-13, no. 3 (1983): 257–266.

Rasmussen, J., and L. P. Goodstein. “Experiments on Data Presentation to Process Operators in Diagnostic Tasks.” In Aspects of Research at Risø: A Collection of Papers Dedicated to Professor T. Bjerge on His Seventieth Birthday, Risø Report No. 256, 1972, 69–84.

Rasmussen, J., and O. M. Pedersen. “Notes on Human Error Classification Comparing the NRC GENCLASS Taxonomy with the Experiments at Risø.” N-5-80, 1980.

Rasmussen, J., O. M. Pedersen, G. Mancini, A. Carnino, M. Griffon, and P. Gagnolet. “Classification System for Reporting Events Involving Human Malfunction.” Risø-M-2240, 1981.

Rasmussen, J., and J. R. Taylor. “Notes on Human Factors Problems in Process Plant Reliability and Safety Prediction.” Risø Report No. 1894, 1976.

Rasmussen, J., and P. Timmermann. “Safety and Reliability of Reactor Instrumentation with Redundant Instrument Channels.” Risø Report No. 34, January 1962.

Sanderson, Penelope M., and Kelly Harwood. “The Skills, Rules and Knowledge Classification: A Discussion of Its Emergence and Nature.” In Tasks, Errors, and Mental Models: A Festschrift to Celebrate the 60th Birthday of Professor Jens Rasmussen, edited by L. P. Goodstein, H. B. Andersen, and S. E. Olsen, 21–34. London; New York: Taylor & Francis, 1988.

Timmermann, P., and J. Rasmussen. “An Attempt to Predict Reliability of Electronic Instruments and Redundant Systems.” In Reactor Safety and Hazards Evaluation Techniques, 433–448. Vienna: IAEA, 1962.

Additional Readings

De Maistre, Xavier. A Journey Around My Room. Translated by Henry Attwell, New York, NY: Hurd and Houghton, 1871. https://archive.org/details/journeyroundmyro00maisrich (Originally published in 1794).

Kant, Vivek. “Supporting the Human Life-Raft in Confronting the Juggernaut of Technology: Jens Rasmussen, 1961–1986.” Applied Ergonomics (in press). doi:10.1016/j.apergo.2016.01.016.

Le Coze, Jean Christophe. “Reflecting on Jens Rasmussen’s Legacy (2) Behind and Beyond, a ‘Constructivist Turn’.” Applied Ergonomics, (November 2015). doi:10.1016/j.apergo.2015.07.013.

Le Coze, Jean Christophe. “Reflecting on Jens Rasmussen’s Legacy. A Strong Program for a Hard Problem.” Safety Science 71 (January 2015): 123–41.

Nielsen, Henry, Keld Nielsen, Flemming Petersen, and Hans Siggaard. “Risø and the Attempts to Introduce Nuclear Power Into Denmark.” Centaurus 41, no. 1 (1999): 64–92. doi:10.1111/j.1600-0498.1999.tb00275.x.

Nielsen, Henry, and Henrik Knudsen. “The Troublesome Life of Peaceful Atoms in Denmark.” History and Technology 26, no. 2 (June 2010): 91–118. doi:10.1080/07341511003750022.

Acknowledgements

I thank my teacher Scott Campbell for providing valuable comments on a previous draft of this document. Part of the research for this paper was supported by the Natural Sciences and Engineering Research Council of Canada (NSERC) Discovery grant #132995 awarded to Catherine Burns. I thank Catherine Burns for the financial support. Special thanks to Henning Boje Andersen for obtaining permission to reproduce figures from Rasmussen’s papers.

[1] Jens Rasmussen worked at Risø Laboratories, Denmark. Risø was established as a national energy research facility for the peaceful use of nuclear energy in Denmark. Nuclear reactors were initially set up to support Risø’s research agenda. Within this atomic agenda, a focal area of research was reactor instrumentation and its reliability, which was addressed by the electronics group at Risø, of which Jens Rasmussen was a member. Risø Laboratories is now part of the Technical University of Denmark (DTU) (http://www.dtu.dk/english/About/CAMPUSES/DTU-RISOE-Campus/Brief-history-of-Risoe, accessed May 5, 2016). For broader insights about Risø Laboratories and nuclear energy, see Nielsen et al., Risø and the Attempts; also Nielsen and Knudsen, Troublesome Life.

[2] Rasmussen, Outlines.

[3] Kant, Supporting the Human; Jensen et al., Analysis of Failure Data; Rasmussen et al., Safety and Reliability; Timmermann et al., Attempt to Predict Reliability.

[4] The dates chosen are not arbitrary. Rasmussen started addressing the challenges posed by technical systems in 1961. In 1983 he presented engineering-based categories of skills, rules and knowledge (SRK) for addressing human performance in high-risk systems. The SRK categories completed the arc of his work on the human operator and human performance, which Rasmussen built on later in his career.

[5] Rasmussen et al., Notes on Human Factors Problems.

[6] Rasmussen, Man-Machine Communication; Rasmussen, Man as Information Receiver; Rasmussen et al., Experiments on Data Presentation; Rasmussen, Role of the Man-Machine Interface.

[7] De Maistre, A Journey Around My Room; Rasmussen, Bits, 1974.

[8] McCallam, Xavier De Maistre and Angelology, 240.

[9] De Maistre, Voyage, Ch. 8.

[10] Rasmussen, Bits, 5.

[11] Rasmussen et al., Notes on Human Factors Problems; Rasmussen et al., Notes on Human Error Classification; Pedersen et al., Mechanisms of Human Malfunction; Rasmussen et al., Classification System for Reporting Events.

[12] Rasmussen, Structure of Knowledge; Rasmussen, Cognitive Models; Rasmussen, Skills, Rules, and Knowledge.

[13] Rasmussen, Skills, Rules, and Knowledge, 258. Also see Sanderson et al., Discussion of Its Emergence and Nature, 21–34.

[14] Rasmussen, Outlines.